DevOps Meetup #13

CIMB

16th July 2019

1000+ members

Who we are

Volunteers (hangs around EngineersMY slack)

https://engineers.my/

Join us!

Monthly meetup announced on meetup.com

Get in touch via meetup.com

or

Slack us to volunteer / speak / sponsor

Other meetups

DevKami curated meetups: https://devkami.com/meetups/

KL meetups by Azuan (@alienxp03): http://malaysia.herokuapp.com/#upcoming

House rules

- Minimal bikeshedding

- Participate!

- Respect opinions — agree to disagree!

- Thank the organizers & sponsors!

Buzz Corner

July 2nd: Cloudflare Outage

Postmortem | Incident Page502 Errors

Major outage impacted all Cloudflare services globally. We saw a massive spike in CPU that caused primary and secondary systems to fall over. We shut down the process that was causing the CPU spike.

Service restored to normal within ~30 minutes. We’re now investigating the root cause of what happened.

Bad Config Deploy

On July 2, we deployed a new rule in our WAF Managed Rules that caused CPUs to become exhausted on every CPU core that handles HTTP/HTTPS traffic on the Cloudflare network worldwide.

…update contained a regular expression that backtracked enormously and exhausted CPU used for HTTP/HTTPS serving

July 2nd: GCloud Network Issues

- Google Cloud networking issues in us-east-1

- physical damage to multiple concurrent fiber bundles serving network paths in us-east1

Incident Page

July 4th: FB, IG and WhatsApp are down in Malaysia, worldwide

US celebrates Independence Day by liberating people from social media slavery

…kidding

MalayMailJuly 5th: Apple iCloud Experiencing Issues

HNJuly 11th: Twitter outage

Status PageIBM acquires RedHat

$34bil acquisition

Articlelazydocker

A simple terminal UI for both docker and docker-compose

GitHub

Linus Torvalds: Lots of Hardware Headaches Ahead

- steady stream of patches being generated as new cybersecurity issues related to the speculative execution model that Intel and other processor vendors rely on

- Each of those bugs requires another patch to the Linux kernel that, depending on when they arrive, can require painful updates to the kernel

- Trade-offs such as reduction of application performance by about 15%

- as processor vendors approach the limits of Moore’s Law, many developers will need to reoptimize their code to continue achieving increased performance

- that requirement will be a shock to many development teams that have counted on those performance improvements to make up for inefficient coding processes

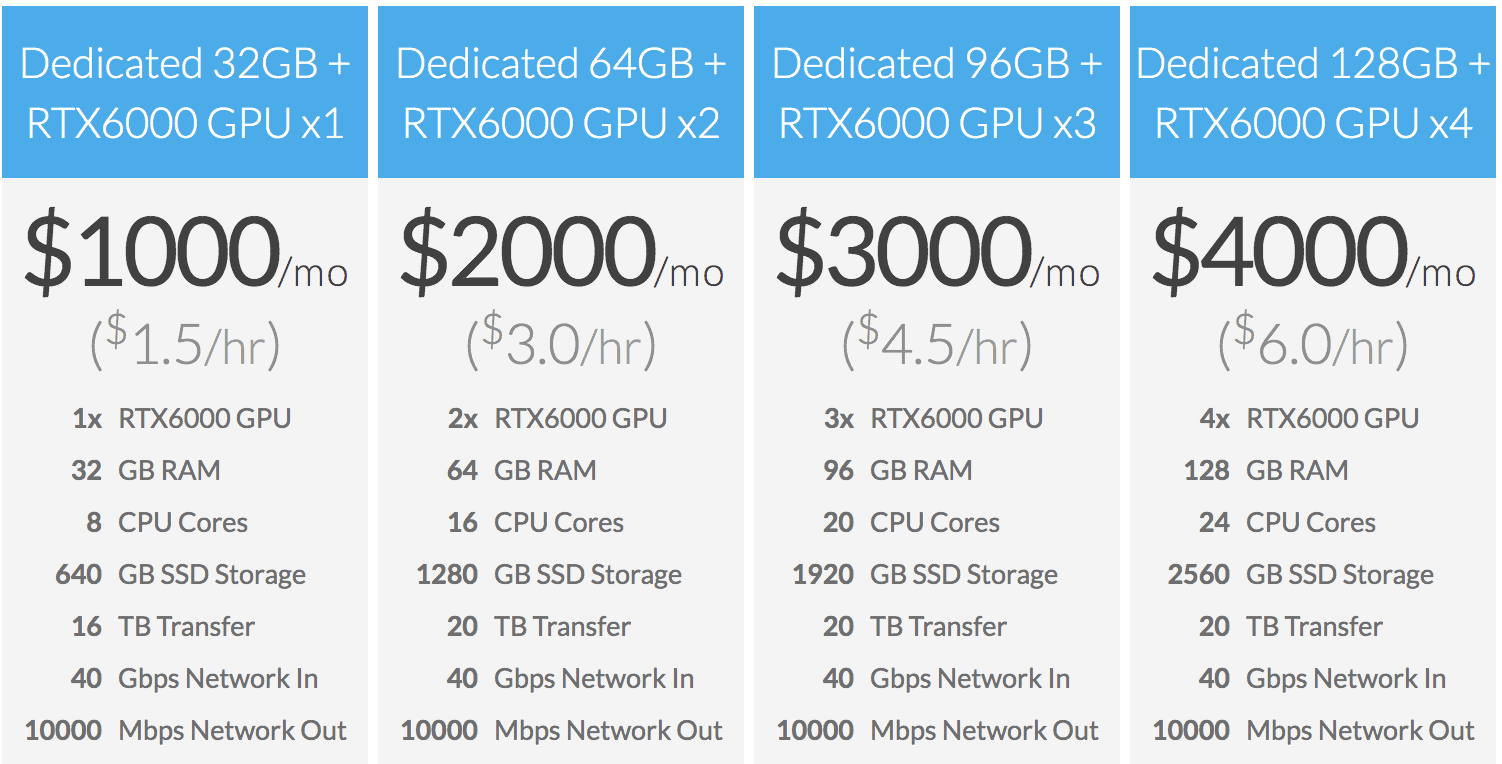

Linode: Introducing GPU Instances

Blog

Windows: Logging Made Easy

Article | GitHubAnnoying state of lambda observability

ArticleStrategies boil down to either:

- Send telemetry directly to external observability tools during Lambda execution

- Scrape or trigger off the telemetry sent to CloudWatch and X-Ray to populate external providers

Send

Send

Pros

- No additional infrastructure is required within AWS, since the telemetry is sent directly to the provider.

- Telemetry is low latency. As soon as the Lambda function returns, we can feel secure that our events are being processed by our provider.

- No additional cost is incurred to process events after the fact

Send

Cons

- telemetry must either be sent across the network as events occur or batched into a report sent at the conclusion of the function’s execution (but before returning success)

- users must be comfortable either losing some of their events, or pay a latency penalty to send telemetry on every single Lambda invocation

Scrape

Scrape

The most common approach to bypass the per-invocation performance penalty of the “Send” approach is to instead “Scrape” CloudWatch and X-Ray to gather metrics/logs/traces into your provider of choice.

Pro: users are able to save latency Lambda invocations

Con: build (potentially expensive) Rube Goldberg style machines to relay and scrape logs and traces from AWS’s products

Both approach not ideal

…rather than force users to build elaborate systems to “scrape” or IPC messages from inside Lambda functions, AWS could provide some type of UDP listening agent on each Lambda host — these agents could perform a similar function to the existing X-Ray agent, but rather than send events to AWS’s X-Ray service, forward them to a customer owned Kinesis Stream. Maybe even call them Lambda Event Streams